By Luca Collina and Roopa Prabhakar

Artificial Intelligence (AI) is transforming our world, driving advancements across various industries. However, as AI becomes more integrated into our daily lives, significant challenges emerge, particularly concerning gender bias. Addressing these biases is crucial for creating fair, effective, and inclusive AI technologies that benefit everyone. This article explores the need to raise awareness about gender bias in AI, discusses case studies highlighting solutions, and emphasizes the consequences of gender biases for individuals and businesses.

First concern: Raising Awareness of Gender Bias in AI

Gender bias in AI is a pressing issue. According to a Deloitte report [1], only 26% of AI jobs worldwide are held by women, a significant gender gap in the field. This underrepresentation contributes to AI systems that often fail to recognize and address the needs of women, perpetuating gender biases. For example, recruiting algorithms may disadvantage women who have shorter work histories, often a result of family responsibilities, and voice recognition systems may struggle to recognize female voices accurately [2].

Intersectionality and AI Bias

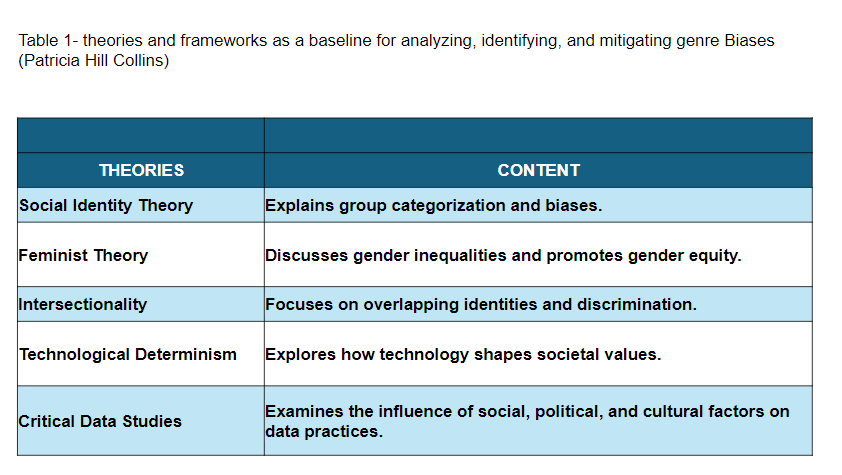

The concept of intersectionality, coined by Kimberlé Crenshaw and developed further as critical social theory by Patricia Hill Collins [3], helps us understand how multiple identity markers, such as race and gender, interact to exacerbate biases in AI systems. Intersectionality reveals that women of color, for instance, face compounded biases compared to their white counterparts, leading to higher levels of discrimination in automated decision-making processes. Recognizing these intricate patterns is the first step toward developing fair and inclusive AI technologies [4].

Case Studies Highlighting Solutions

- Recruitment Algorithms [5]: A notable example of gender bias in AI is Amazon’s recruitment algorithm, which was found to be biased against women because it had been trained on predominantly male resumes. Upon identifying this bias, Amazon discontinued the use of the algorithm, illustrating the importance of bias audits and corrective measures in AI development.

- IBM’s Hackathons and Mentorship Programs [6]: IBM has been proactive in fostering inclusivity through initiatives like hackathons and mentorship programs specifically designed for women in tech. These programs not only provide women with valuable opportunities to enhance their skills but also promote a culture of inclusivity and diversity within the tech industry.

- Google’s AI Fairness Program [7]: Google’s AI Fairness program trains its staff to recognize and mitigate biases in AI processes. By incorporating diverse data and inclusive practices, Google aims to reduce the prevalence of gender bias in its AI systems, demonstrating the effectiveness of educational initiatives in promoting gender equity.

- Salesforce’s Inclusive Hiring Practices [8]: Salesforce focuses on recruiting from underrepresented groups to build diverse AI teams. This approach ensures that AI systems are developed with a broad range of perspectives, which is crucial for creating fair and unbiased technologies. Education and training programs that raise awareness about gender biases and promote inclusive practices among AI practitioners are crucial for long-term change.

- Microsoft’s Continuous Monitoring [9]: Microsoft implements continuous monitoring and feedback mechanisms to support its efforts in promoting inclusivity. This ongoing assessment helps identify areas for improvement and ensures sustained progress in reducing gender bias.
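To make the idea of a bias audit more concrete, here is a minimal sketch in Python. It is illustrative only and does not describe how any of the companies above audit their systems. It assumes a hypothetical list of screening outcomes, compares the selection rate of each group, and flags the result for review when the lowest rate is less than 80% of the highest, the "four-fifths" rule of thumb from US employment-selection guidance. The function names, group labels, and numbers are all made up for the example.

```python
from collections import Counter


def selection_rates(records):
    """Return the share of candidates selected in each group.

    `records` is an iterable of (group, selected) pairs. The layout and
    the group labels are hypothetical, chosen only for this sketch.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up screening outcomes, for illustration only.
sample = (
    [("women", True)] * 3 + [("women", False)] * 7
    + [("men", True)] * 6 + [("men", False)] * 4
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb, not a legal test

print(rates, round(ratio, 2), needs_review)
```

A check like this is only a starting point. A low ratio signals that the model and its training data deserve a closer look; it does not by itself explain where the disparity comes from, and an intersectional audit would repeat the comparison across combinations of attributes rather than gender alone.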

Implications of Gender Bias in AI

The presence of gender biases in AI has profound implications for both individuals and businesses. For individuals, biased AI systems can lead to unfair treatment and missed opportunities, particularly for women and other underrepresented groups. In the business context, gender bias can result in reduced innovation, poor decision-making, and a lack of trust in AI technologies [10].

Impacts on Individuals

Biased AI systems can perpetuate stereotypes and discrimination, leading to unfair treatment and unequal access in areas such as hiring, healthcare, finance, and other essential services, and exacerbating existing inequalities. For example, AI tools in healthcare may misdiagnose conditions in women because they were built on male-biased data, resulting in inadequate treatment and poorer health outcomes [11].

Impacts on Businesses

Businesses that fail to address gender bias in their AI systems risk alienating a significant portion of their customer base, which can lead to reputational damage, financial losses, legal repercussions, and missed opportunities for innovation and market growth. Moreover, companies that prioritize diversity and inclusivity tend to outperform their peers, as diverse teams bring a wider range of perspectives and ideas, driving innovation and creativity [12].

Conclusion?

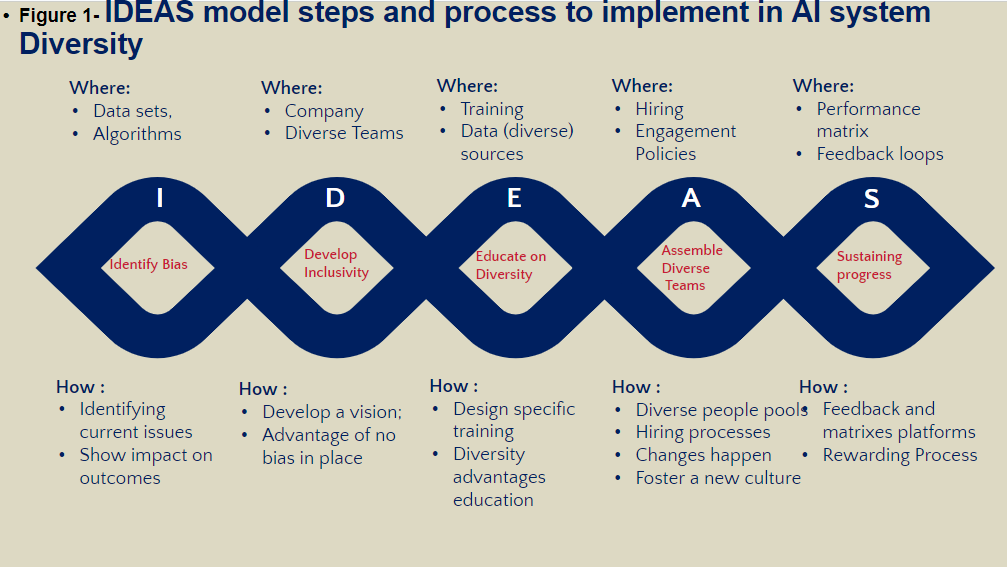

While addressing gender bias in AI is the primary focus here, doing so must be treated as a transition from analysis to action, one that requires a detailed guide and roadmap inspired by elements of change management: IDEAS © (Identify Bias, Develop Inclusivity, Educate on Diversity, Assemble Diverse Teams, Sustain Progress; © Luca Collina MBA and Roopa Prabhakar).

Stay tuned for more information on the steps and processes to follow!


All the photos in the article are provided by the author(s) mentioned in the article and are used with permission.

About the Authors

Roopa Prabhakar holds a master’s degree in Electronics & Communication Engineering and has over 20 years of experience in data & analytics. She currently serves as a Global Business Insights Leader at Randstad Digital. She specializes in modernizing and migrating legacy technology towards AI-enabled systems, bridging traditional IT roles with AI-powered functions. As an independent researcher, Roopa focuses on gender bias in AI, informed by works from UN Women and the World Economic Forum. She is actively building a “Women in AI” community and champions “Women in Digital” initiatives within her company, aiming to increase female representation in AI and provide support for women in technology. Roopa is passionate about promoting ethical practices that address historical biases against women and continues to upgrade her skills to contribute positively to the AI landscape.


Luca Collina’s background is as a management consultant. He has managed transformational projects, including at the international level (Tunisia, China, Malaysia, Russia). He now helps companies understand how Gen-AI technology impacts business, use technology wisely, and avoid problems. He has an MBA in Consulting, has received academic awards, and was recently nominated for Awards 2024 by the Centre of Management Consultants for Excellence. He is a published author. Thinkers360 named him one of the Top Voices, globally and in EMEA, in 2023, and he is currently among the top 10 thought leaders in Gen-AI and #1 in Business continuity. Luca continuously upgrades his knowledge through experience and research so that he can pass it on. He is ready to launch interactive courses on “AI & Business” in September 2024.


References

1. Deloitte, 2023. Deloitte Women in AI. Available at: https://www.deloitte.com/global/en/about/people/people-stories/deloitte-women-in-ai.html [Accessed 17 July 2024].

2. O’Connor, S. and Liu, H., 2023. Gender bias perpetuation and mitigation in AI technologies: challenges and opportunities. AI & Society.

3. Collins, P.H., 2019. Intersectionality as Critical Social Theory. Durham, NC: Duke University Press. Available at: https://www.dukeupress.edu/intersectionality-as-critical-social-theory [Accessed 17 July 2024].

4. González, A.S. and Rampino, L., 2024. A design perspective on how to tackle gender biases when developing AI-driven systems. AI and Ethics.

5. Amazon, 2023. How Amazon Leverages AI and ML to Enhance the Hiring Experience for Candidates. Available at: https://www.aboutamazon.com/news/workplace/how-amazon-leverages-ai-and-ml-to-enhance-the-hiring-experience-for-candidates [Accessed 24 July 2024].

6. IBM, 2023. IBM Pathfinder Mentoring Program. Available at: https://mediacenter.ibm.com/channel/IBM%2BPathfinder%2BMentoring%2BProgram/156911071 [Accessed 18 July 2024].

7. Google, 2023. Introduction to Responsible AI. Available at: https://developers.google.com/machine-learning/resources/intro-responsible-ai [Accessed 17 July 2024].

8. Salesforce, 2023. Develop Inclusive and Accessible Hiring Policies. Available at: https://trailhead.salesforce.com/content/learn/modules/inclusive-and-accessible-hiring-policies/develop-inclusive-and-accessible-hiring-policies [Accessed 20 July 2024].

9. PwC Netherlands, 2023. Continuous Monitoring Platform. Available at: https://appsource.microsoft.com/en-us/product/web-apps/pwcnetherlands.cmp?tab=overview [Accessed 20 July 2024].

10. UN Women, 2024. Artificial intelligence and gender equality. Available at: https://www.unwomen.org/en/news-stories/explainer/2024/05/artificial-intelligence-and-gender-equality [Accessed 20 July 2024].

11. Marsh McLennan, 2020. How Will AI Affect Gender Gaps in Health Care? Available at: https://www.marshmclennan.com/insights/publications/2020/apr/how-will-ai-affect-gender-gaps-in-health-care-.html [Accessed 20 July 2024].

12. Makinde, O.D., Babatunde, B.A., Fadiran, O.O., Adewumi, A.O. and Oladele, O.I., 2023. Assessment of novel bioresource materials for mitigating environmental pollution. Sustainable Environment Research.