By Luca Collina and Roopa Prabhakar

Off we go again – another explanation about AI

AI is now the focus of the technology world, and its influence is pervasive. The technology is driving business growth, reshaping labour markets, and changing how work is done in firms. Like it or not, AI is here to stay.

A growing number of organisations are taking advantage of this trend, using AI to work faster and smarter. It is rapidly transforming industries.

So, artificial intelligence matters; it is changing everything without waiting for official consent. Let us now proceed with a clarifying analysis of biases.

Defining AI Bias

There are several definitions of AI bias, many of which have already been discussed in business and academia. The aim here is to classify biases by their origin and their impact on AI. This helps to understand the root causes and, later, to see what non-specialists have available to check and monitor them.

AI bias occurs when AI systems render unfair decisions. This happens because either the data used to train an AI is inherently unfair or the people who build it bring their own prejudices to the task. When bias is detected in some aspect of our lives, it usually reflects deep-rooted prejudices that can be found at different levels throughout society and that culminate in structural inequalities, if not outright injustices. This is why two types of root cause are highlighted:

Social (Systemic Inequalities) Sources

Social hierarchies and inequalities

Technical (Data) Sources

The data sets used to train ML models carry historical, representation, and measurement biases, as well as systemic inequity.

The combination of the two is called socio-technical bias.

Rationale for Addressing Biases

Here is a quick reminder of the types of bias in AI that are worth highlighting:

- Measurement bias occurs when the wrong data are used to understand a particular issue. For example, if we judge who can progress in life only by their financial means, we may be wrong, because wealth is not the only factor that determines success (Lopez, 2021).

- Aggregation bias occurs when all the information is pooled together and details about minorities are overlooked. For example, you might not learn about abject poverty within a country if you only examine its population as a whole (ibid.).

- Learning bias: the AI learns from prior knowledge. When that knowledge contains unjust ideas, the AI can adopt them as if they were new, so the old problems are carried forward rather than removed (Blatz, 2021).

- Labelling bias (also called misclassification bias) refers to mistakes made when labelling data. The people responsible for labelling may make errors or impose their personal opinions, and the AI can then use these inappropriate labels for decision-making (Jackson, 2024).

- Selection bias happens when some groups are under-represented in the data used to train the AI. For example, face-scanning systems may work well on people with white skin but fail to scan people with black skin correctly, because they were not sufficiently represented in the training data (Manyika, Silberg and Presten, 2019).
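To make the aggregation and selection points concrete, here is a minimal Python sketch. It assumes a made-up set of predictions in which one group is heavily under-represented; the data, the group names and the `accuracy_by_group` helper are all hypothetical and are not taken from any of the cited sources. It shows how a single overall accuracy figure can look healthy while the smaller group is served poorly.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return (overall_accuracy, {group: accuracy}).

    Each record is a (group, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(totals.values())
    per_group = {g: correct[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical, made-up data: 90 records for group "A", only 10 for group "B".
records = (
    [("A", 1, 1)] * 85 + [("A", 1, 0)] * 5    # group A: 85 of 90 correct
    + [("B", 1, 1)] * 4 + [("B", 1, 0)] * 6   # group B: 4 of 10 correct
)

overall, per_group = accuracy_by_group(records)
print(f"overall accuracy: {overall:.2f}")
for group, acc in sorted(per_group.items()):
    print(f"group {group}: {acc:.2f}")
```

Reading the aggregate alone would hide the gap; breaking the figure down by group is what makes it visible.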

Implications of AI Biases

Problems of AI Bias from an Ethical Perspective

One of the greatest challenges facing AI is bias. AI can discriminate, putting many individuals at a disadvantage, which is especially harmful in important decisions such as job recruitment or healthcare provision. Fairness demands that no one be preferred over another in these contexts; otherwise AI simply reinforces old, unfair patterns. Institutions that rely on flawed systems may also face legal disputes and be taken to court.

Influence of AI Bias on Community Development and Wealth Creation

Unjust AI has negative implications for society as well as the economy. Life becomes harder for the people affected when they face obstacles such as limited access to education, employment, or good health facilities in their regions. When AI keeps repeating past errors about people’s identities, it normalises discrimination, and people end up trusting AI even less.

Concrete Cases of AI Bias

For instance, women have been denied job opportunities they deserved on equal terms with men because an AI system discriminated against them. In another case, AI rejected loan applications from minority groups more often than those from other groups. Other systems were less effective at diagnosing certain medical conditions in patients of specific ethnicities, which left more people ill (unfortunately, to be continued).

Approaches to Bias Mitigation in AI: Technical Solutions

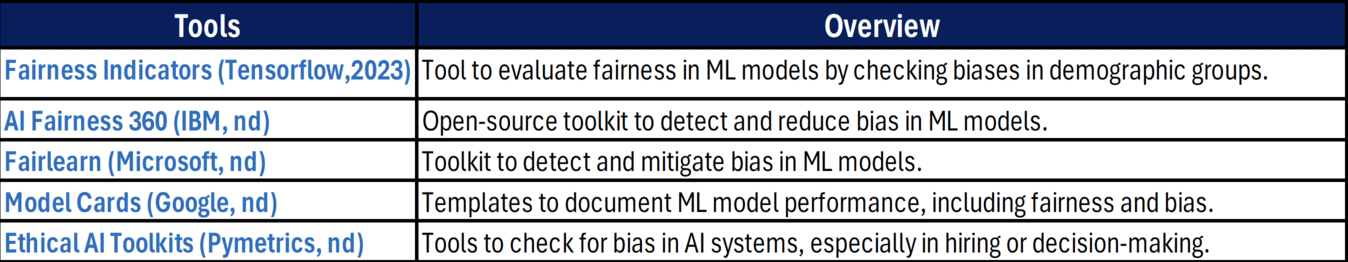

Fortunately, there are tools that can help to fix the problem. They are designed to detect and mitigate bias in AI systems.

Below is a list of some of these tools. They have been built to be user-friendly so that a variety of people can use them, including data professionals, researchers and consultants. Some check whether an AI treats all users fairly, while others help to reduce bias within an AI model. There are also tools for understanding fairness in both design procedures and outcomes.

They use various approaches to improve AI systems.

Tools Overview

- Fairness Indicators (TensorFlow, 2023): tool to evaluate fairness in ML models by checking for biases across demographic groups.

- AI Fairness 360 (IBM, nd): open-source toolkit to detect and reduce bias in ML models.

- Fairlearn (Microsoft, nd): toolkit to detect and mitigate bias in ML models.

- Model Cards (Google, nd): templates to document ML model performance, including fairness and bias.

- Ethical AI Toolkits (Pymetrics, nd): tools to check for bias in AI systems, especially in hiring or decision-making.
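As a rough illustration of the kind of group-level check such toolkits automate, the sketch below computes selection rates per group and the gap between them, a measure often called the demographic parity difference. It is hand-written plain Python and does not use the API of any of the tools listed above; the function names and the hiring data are hypothetical.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1 = selected) for each group."""
    counts = {}
    for decision, group in zip(decisions, groups):
        positives, total = counts.get(group, (0, 0))
        counts[group] = (positives + decision, total + 1)
    return {g: positives / total for g, (positives, total) in counts.items()}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rate (0 = parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical screening outcomes for ten applicants (1 = shortlisted).
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["men"] * 5 + ["women"] * 5

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates)
print(f"demographic parity difference: {gap:.2f}")
```

A gap close to zero suggests that the groups are shortlisted at similar rates; a large gap is a prompt for further investigation, not proof of discrimination on its own.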

AI Literacy: Education and Practical Training

While technical tools are important, they cannot be fully effective without widespread AI literacy. This crucial aspect involves:

Education:

- Understanding the fundamentals of AI and machine learning

- Recognizing different types of bias and their sources

- Learning to critically evaluate AI systems and their outputs

- Developing skills to interpret AI decisions and their potential impacts

- Fostering an ethical mindset in AI development and deployment

Practical Training:

- Hands-on experience with bias detection and mitigation tools

- Case studies and real-world scenarios

- Ethical decision-making exercises in AI development

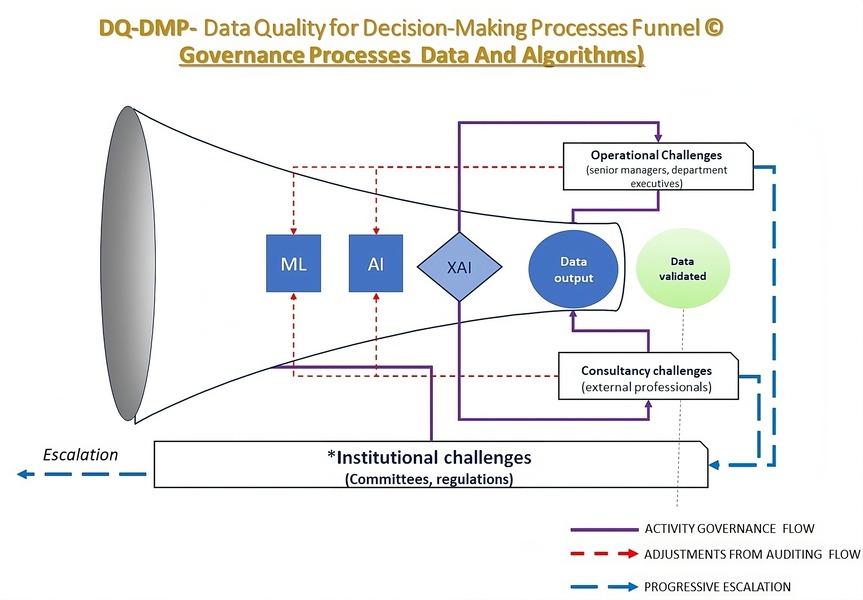

Ongoing Monitoring and Auditing

Implementing regular audits and continuous monitoring of AI systems to detect and address bias over time is a matter of governance. We have proposed a model called the DATA QUALITY FUNNEL ©, in which the monitoring of algorithms and outputs can be organised within companies and with external consultants. The model includes institutional challenging as well as risk assessment and monitoring (consultancy challenging and operational challenging):

“Institutional Challenging: Institutions, by creating committees, including AI specialists and non-executive directors, may establish overarching rules to guide decisions with both artificial intelligence technology and human expertise.

Consultancy Challenging: These challenges may be tackled by external professionals who utilise critical assessment to produce more substantial and sustainable outcomes through independent and impartial opinions.

Operational Challenging: These challenges are for the operations staff who watch directly how the AI systems work on tasks. They can run checks and raise issues about problems to rectify algorithms and improve them through an escalation process, but they don’t intervene in modifying the algorithms.” (Collina, Sayyadi & Provitera, 2024)
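The operational check described in the quotation could, under some assumptions, look like the Python sketch below. Operations staff run a check on the system's outputs and raise an escalation record when a fairness measure drops below an agreed level; the code only reports and never changes the model. The `Escalation` record, the `check_selection_ratio` function and the 0.8 threshold are hypothetical choices made for this illustration and are not part of the Data Quality Funnel model itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Escalation:
    """A report for reviewers; it describes an issue and changes nothing."""
    metric: str
    value: float
    threshold: float
    note: str


def check_selection_ratio(rates: dict, threshold: float = 0.8) -> Optional[Escalation]:
    """Compare the lowest and highest group selection rates.

    Returns an Escalation when lowest / highest falls below `threshold`
    (an assumed, illustrative value), otherwise None.
    """
    highest = max(rates.values())
    lowest = min(rates.values())
    if highest == 0:
        return None  # nobody was selected, so there is no ratio to compare
    ratio = lowest / highest
    if ratio < threshold:
        return Escalation(
            metric="selection_rate_ratio",
            value=ratio,
            threshold=threshold,
            note="Refer to the governance committee; do not modify the algorithm.",
        )
    return None


# Hypothetical weekly selection rates observed by operations staff.
weekly_rates = {"group_a": 0.50, "group_b": 0.30}
issue = check_selection_ratio(weekly_rates)
if issue is not None:
    print(f"ESCALATE: {issue.metric} = {issue.value:.2f} (threshold {issue.threshold})")
```

Keeping detection separate from correction mirrors the division of roles in the quotation: the people who observe the system raise the issue, and others decide how the algorithm should be changed.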

Summing up

To mitigate bias in AI effectively, companies should incorporate AI education within their main operations and put in place comprehensive structures to govern the technology. The future of AI depends not only on its level of technical advancement but also on our capacity for responsible and ethical governance.

This means creating an environment in which AI literacy becomes part of the organisation’s culture, so that employees can question AI systems critically from within and address bias at its source.

Internal regulations and accountability systems form the foundation of this process, while ensuring that bias is proactively identified and corrected requires transparent procedures for monitoring and auditing AI decisions. Businesses should also foster an attitude of “continuous improvement”, because technical measures alone cannot be relied upon.

AI Literacy Programs

Make AI literacy programs the norm: have regular employee training sessions on AI basics, including its ethical connotations and how to deal with bias in the workplace.

Internal Governance Structures

A system of governance similar to the Data Quality Funnel® that incorporates continuous bias audits and persistent monitoring ought to be established.

Continuous Feedback Loops

It is better to use both external consultants and internal monitoring and feedback systems, which refine AI systems in real time and so make them more robust over time.

By marrying AI literacy with strong internal governance mechanisms, organisations can move beyond just addressing personal prejudices to becoming pioneers of responsible AI innovation.

About the Authors

Roopa Prabhakar holds a master’s degree in Electronics & Communication Engineering and has over 20 years of experience in data & analytics. She currently serves as a Global Business Insights Leader at Randstad Digital. She specializes in modernizing and migrating legacy technology towards AI-enabled systems, bridging traditional IT roles with AI-powered functions. As an independent researcher, Roopa focuses on gender bias in AI, informed by works from UN Women and the World Economic Forum. She is actively building a “Women in AI” community and champions “Women in Digital” initiatives within her company, aiming to increase female representation in AI and provide support for women in technology. Roopa is passionate about promoting ethical practices that address historical biases against women and continues to upgrade her skills to contribute positively to the AI landscape.

Luca Collina’s background is as a management consultant. He has managed transformational projects, including at the international level (Tunisia, China, Malaysia, Russia). He now helps companies understand how Gen-AI technology impacts business, use technology wisely, and avoid problems. He has an MBA in Consulting, has received academic awards, and was recently nominated for Awards 2024 by the Centre of Management Consultants for Excellence. He is a published author. Thinkers360 named him one of the Top Voices, globally and in EMEA, in 2023, and he is currently among the top 10 thought leaders in Gen-AI and #1 in business continuity. Luca continuously upgrades his knowledge through experience and research in order to pass it on. He is ready to launch interactive courses on “AI & Business” in September 2024.

References

- Blatz, J. (2021). Using Data to Disrupt Systemic Inequity. Stanford Social Innovation Review. Available at: https://ssir.org/articles/entry/using_data_to_disrupt_systemic_inequity [Accessed 8 Sep. 2024].

- Collina, L., Sayyadi, M. & Provitera, M., 2024. The new data management model: Effective data management for AI systems. California Management Review. Available at: https://cmr.berkeley.edu/2024/03/the-new-data-management-model-effective-data-management-for-ai-systems/ [Accessed 04 Sept. 2024].

- Google (nd) Model Cards. Available at: https://modelcards.withgoogle.com/about (Accessed: 9 September 2024).

- IBM (nd) AI Fairness 360. Available at: https://aif360.res.ibm.com/ (Accessed: 9 September 2024).

- Jackson, A. (2024). The Dangers of AI Bias: Understanding the Business Risks. AI Magazine. Available at: https://aimagazine.com/machine-learning/the-dangers-of-ai-bias-understanding-the-business-risks [Accessed 9 Sep. 2024].

- Lopez, P. (2021). Bias does not equal bias: a socio-technical typology of bias in data-based algorithmic systems. Internet Policy Review, [online] 10(4). Available at: https://policyreview.info/articles/analysis/bias-does-not-equal-bias-socio-technical-typology-bias-data-based-algorithmic [Accessed: 7 Sep. 2024].

- Manyika, J., Silberg, J. and Presten, B., 2019. What AI can and can’t do (yet) for your business. Harvard Business Review. Available at: https://hbr.org/2019/01/what-ai-can-and-cant-do-yet-for-your-business [Accessed 10 Sep. 2024].

- Microsoft (nd) Fairlearn: A toolkit for assessing and improving fairness in AI. Available at: https://fairlearn.org/ (Accessed: 9 September 2024).

- Pymetrics (nd) Audited & Ethical AI. Available at: https://www.pymetrics.ai/audited-ethical-ai (Accessed: 9 September 2024).

- TensorFlow (2023) Fairness Indicators. Available at: https://www.tensorflow.org/tfx/guide/fairness_indicators (Accessed: 9 September 2024).